Filter PySpark DataFrame Column with None Value in Python (3 Examples)

In this tutorial, I’ll show how to filter a PySpark DataFrame column with None values in the Python programming language.

The table of contents is structured as follows:

- Introduction

- Creating Example Data

- Example 1: Filter DataFrame Column Using isNotNull() & filter() Functions

- Example 2: Filter DataFrame Column Using filter() Function

- Example 3: Filter DataFrame Column Using selectExpr() Function

- Video, Further Resources & Summary

Let’s dive into it!

Introduction

PySpark is the Python API for Apache Spark, a free and open-source engine for large-scale data processing.

We can create a SparkSession, the entry point to PySpark, by specifying an app name and calling the getOrCreate() method.

SparkSession.builder.appName(app_name).getOrCreate()

After creating the data set with a list of dictionaries, we also have to pass the data object to the createDataFrame() method. Once we have done this, a PySpark DataFrame is created.

spark.createDataFrame(data)

In the next step, we may display the DataFrame using the show() method as shown below:

dataframe.show()

So far, so good – let’s move on to the example data creation.

Creating Example Data

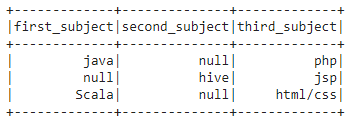

In this example, we’ll create a DataFrame object from a list of dictionaries with three rows and three columns, containing student subjects along with None values. We can print the DataFrame by using the show() method:

    # import the pyspark module
    import pyspark

    # import SparkSession from the pyspark.sql module
    from pyspark.sql import SparkSession

    # create the SparkSession and give the app a name
    spark = SparkSession.builder.appName('statistics_globe').getOrCreate()

    # create a list of 3 dictionaries, each with 3 key-value pairs
    data = [{'first_subject': 'java', 'second_subject': None, 'third_subject': 'php'},
            {'first_subject': None, 'second_subject': 'hive', 'third_subject': 'jsp'},
            {'first_subject': 'Scala', 'second_subject': None, 'third_subject': 'html/css'}]

    # create a DataFrame from the list of dictionaries
    dataframe = spark.createDataFrame(data)

    # display the final DataFrame
    dataframe.show()

This table shows our example DataFrame that we will use in the present tutorial. As you can see, it contains three columns that are called first_subject, second_subject, and third_subject with None values.

Let’s remove those None values from the existing PySpark DataFrame!

Example 1: Filter DataFrame Column Using isNotNull() & filter() Functions

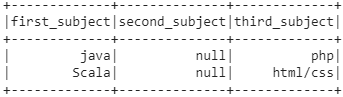

This example uses the filter() method followed by isNotNull() to remove None values from a DataFrame column.

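The isNotNull() method returns a Boolean column that is True for every row in which the column value is not None. The filter() method then keeps only the rows for which this condition is True, so the rows containing None are dropped.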

dataframe.filter(dataframe.column_name.isNotNull())

In this example, we are filtering None values in the first_subject column.

Have a look at the Python code below:

    # using isNotNull() with the filter method
    dataframe.filter(dataframe.first_subject.isNotNull()).show()

Table 2 shows the output of the previous syntax. As you can see, only the rows without None values in the first_subject column have been kept.

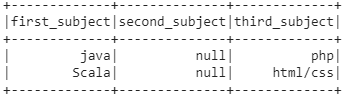

Example 2: Filter DataFrame Column Using filter() Function

This example passes a SQL expression string to the filter() method and uses the IS NOT NULL predicate to remove None values.

dataframe.filter("column_name is Not NULL")

In this specific example, we are going to remove None values from the first_subject column once again:

    # using the IS NOT NULL operator with the filter method
    dataframe.filter("first_subject is Not NULL").show()

The output table that we have created in this example is the same as in Example 1. However, this time we have applied a different method.

Example 3: Filter DataFrame Column Using selectExpr() Function

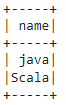

In this example, we are using the selectExpr() method, which selects DataFrame columns by evaluating SQL expressions. Note that selectExpr() on its own does not drop any rows, so it has to be combined with filter() to exclude None values.

Within the expression, the as keyword returns the column under a new name (an alias).

dataframe.selectExpr("column_name as new_column_name")

In this specific example, we first remove the None values from the first_subject column with filter() and then use selectExpr() to return the column under the new name “name”.

    # get rid of None values in the first_subject column
    # and rename the column to name
    dataframe.filter("first_subject is Not NULL").selectExpr("first_subject as name").show()

Video, Further Resources & Summary

Do you need more explanations on how to filter None Values? Then you may have a look at the following YouTube video of the Knowledge Sharing YouTube channel.

In this video tutorial, the speaker shows how to handle Null values in a PySpark DataFrame.

Also, you may have a look at some other Python PySpark articles on the Data Hacks website:

- Add New Column to PySpark DataFrame in Python

- Change Column Names of PySpark DataFrame in Python

- Concatenate Two & Multiple PySpark DataFrames

- Convert PySpark DataFrame Column from String to Double Type

- Convert PySpark DataFrame Column from String to Int Type

- Display PySpark DataFrame in Table Format

- Export PySpark DataFrame as CSV

- groupBy & Sort PySpark DataFrame in Descending Order

- Import PySpark in Python Shell

- Python Programming Tutorials

This page has shown how to delete None values from a PySpark DataFrame in the Python programming language. In case you have any additional questions, you may leave a comment in the comments section below.

This article was written in collaboration with Gottumukkala Sravan Kumar. You may find more information about Gottumukkala Sravan Kumar and his other articles on his profile page.